In the age of artificial intelligence, the most valuable assets are time and trust—yet the trust gap remains unresolved.

As AI systems play an increasingly critical role in healthcare, finance, criminal justice, and government, the ability to verify how they make decisions has become non-negotiable. While AI improves efficiency, productivity, and capability, it also introduces risks, such as misinformation, opaque decision-making, and unintended actions, that can have serious real-world consequences.

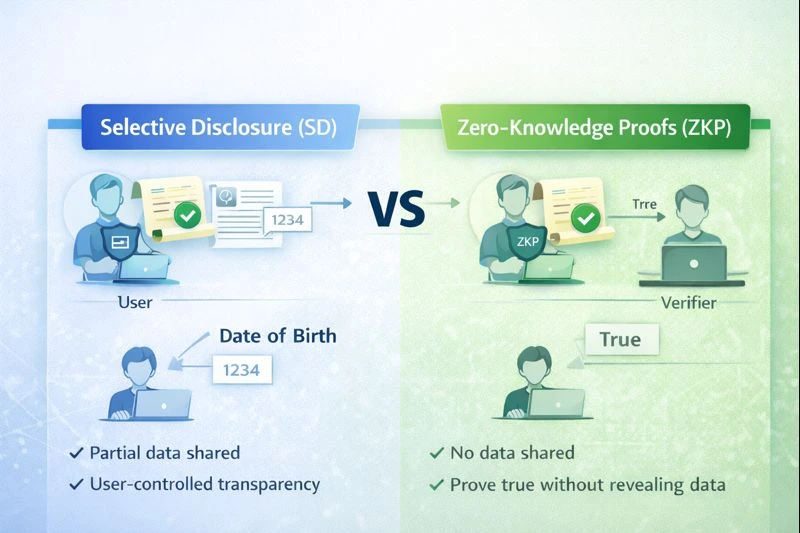

Regulatory frameworks and AI governance policies are evolving to address these challenges. However, anyone building or deploying AI systems knows that compliance alone does not create trust. What is required is a technological trust layer, such as Verifiable AI, that uses cryptographic proofs, verifiable credentials, and distributed ledger technology (DLT) to make AI systems transparent, accountable, and trustworthy across digital identity and automated decision-making workflows.

What Is Verifiable AI?

Verifiable AI is a framework for building AI systems that are transparent, auditable, and accountable by design. Unlike traditional black-box AI systems, where it is difficult or impossible to understand how decisions are made, Verifiable AI introduces mechanisms for decision traceability and provable trust.

At a practical level, this means moving away from “trust us, the model works” toward systems that can prove the authenticity, integrity, and origin of their actions. Verifiable AI systems and autonomous agents achieve this using cryptographic proofs, verifiable credentials, and distributed ledger technology (DLT).

At its core, Verifiable AI enables organizations, regulators, and end users to answer three critical questions confidently:

- Who created or deployed this AI system?

- What data and models was it trained on?

- Can its decisions and actions be independently traced, audited, and verified?

Why Verifiable AI Matters: The Risks of Black Box AI

Black-box AI refers to systems that generate outputs from given inputs while leaving the internal reasoning opaque, including how decisions were reached, what data was used for training, and whether that data came from trusted sources. This lack of transparency creates serious challenges for trust, accountability, and explainability.

For organizations operating in regulated or high-impact environments, these challenges translate directly into risk: brand damage, legal exposure, compliance failures, and regulatory penalties. As AI systems increasingly influence critical outcomes, the inability to audit and verify AI decisions becomes a business liability, not merely a technical limitation.

This is where ethical AI and Verifiable AI converge, not as abstract ideals but as operational requirements. Ethical AI emphasizes responsible and accountable systems, while Verifiable AI provides the technical foundation to make those principles enforceable in real-world deployments.

How Verifiable Credentials Power Verifiable AI

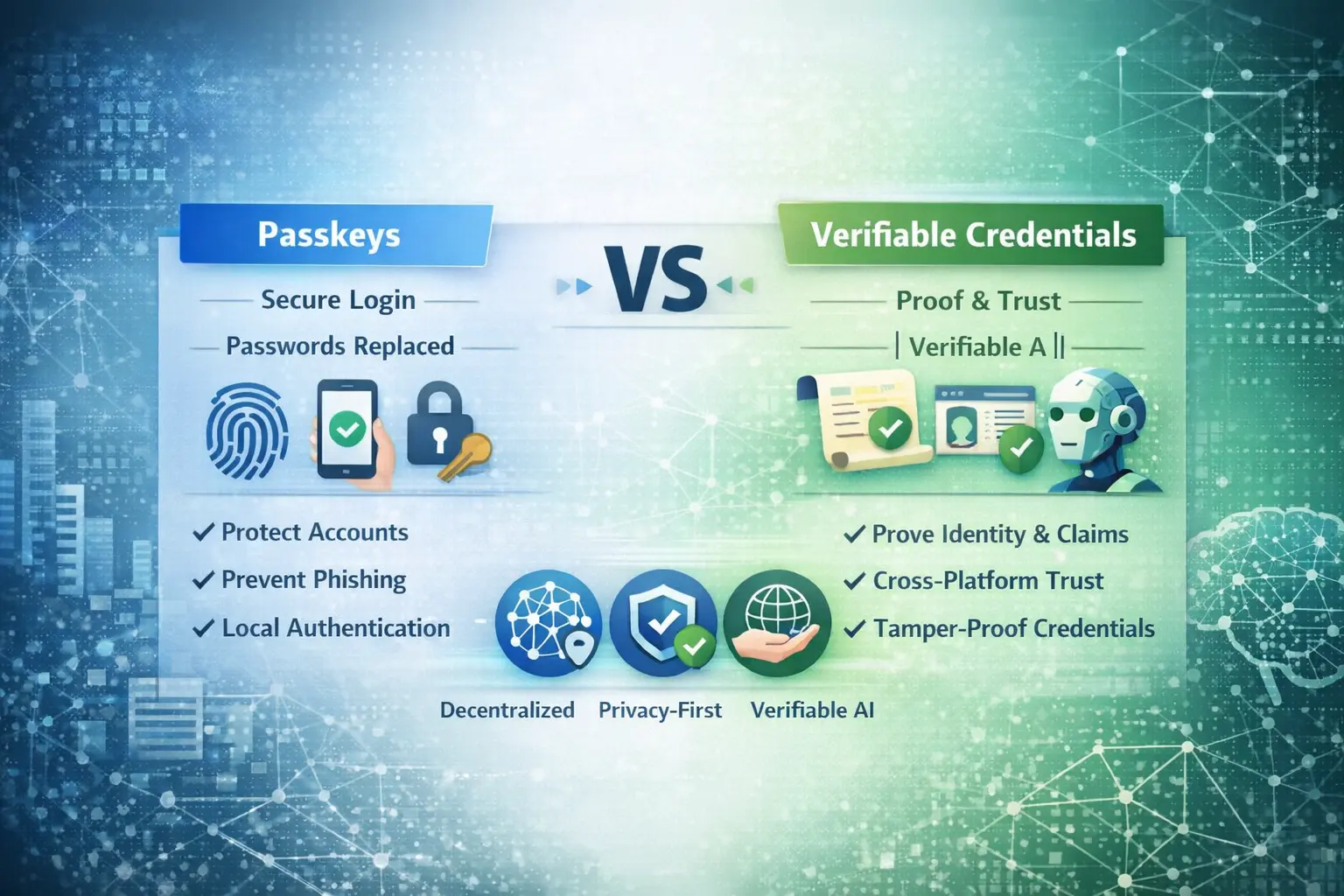

Verifiable AI and Verifiable Credentials are often discussed separately, but in practice, they are inseparable. Verifiable AI defines the goal; Verifiable Credentials make it enforceable.

Verifiable Credentials act as cryptographic proof layers that allow AI systems to demonstrate identity, authority, compliance, and decision integrity.

Proving the Identity and Source of the AI

Verifiable AI requires that an AI agent can prove its authenticity and origin.

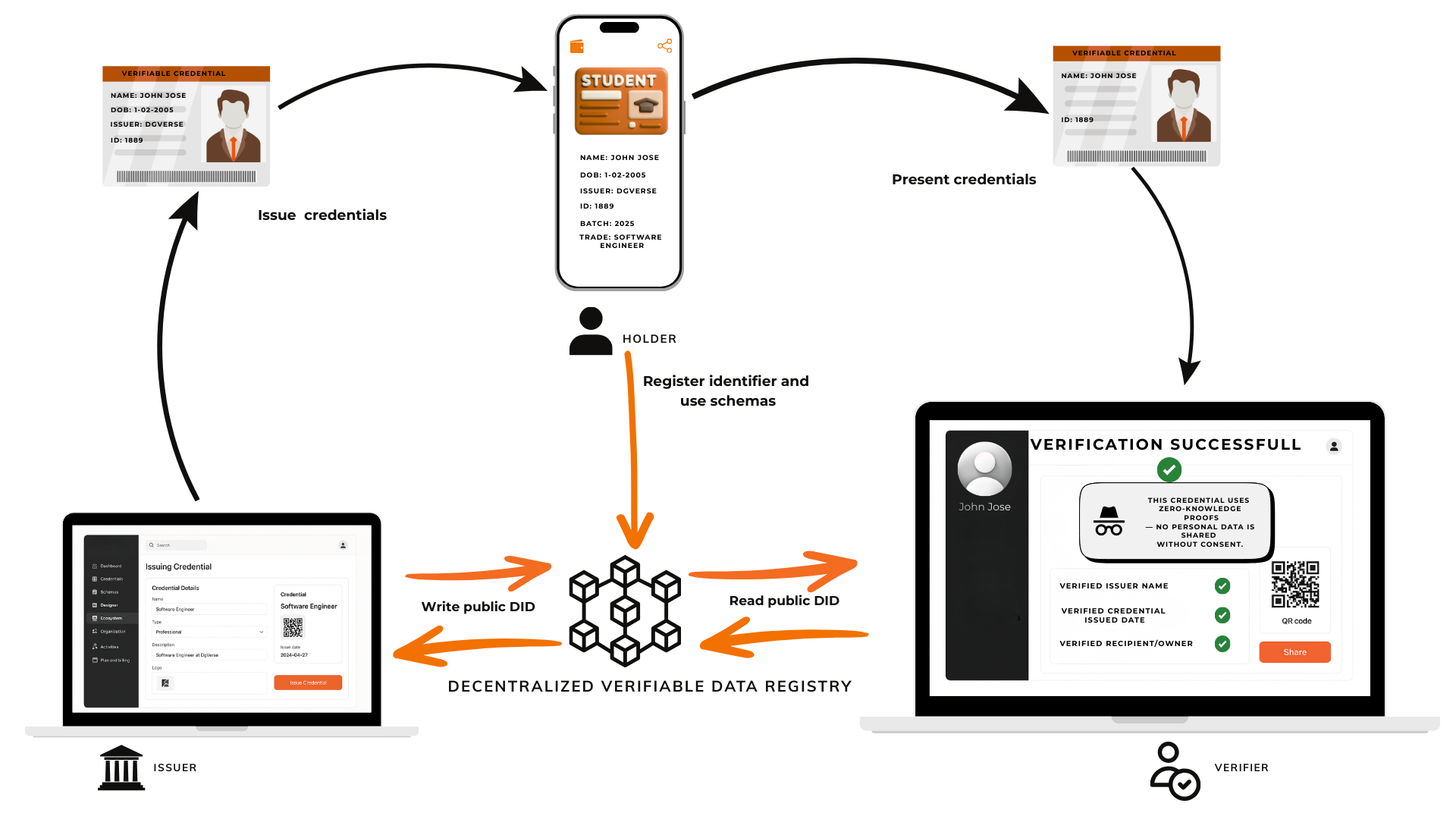

Verifiable Credentials function as a digital passport for AI agents.

- Authentication: An AI agent is issued a unique Decentralized Identifier (DID) anchored on a DLT, and it uses the private key bound to that DID to sign its actions and requests.

- Mutual Trust: For example, a bank’s AI agent can present a Verifiable Credential to a customer to prove it is an authorized representative before any sensitive interaction takes place.
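To make the “digital passport” idea concrete, here is a minimal sketch of an agent signing a request with the key registered under its DID, and a verifier checking that signature. It assumes a hypothetical in-memory `REGISTRY` in place of real DID resolution on a DLT, and `did:example:bank-agent-01` is an illustrative identifier. So that it runs with only the Python standard library, it uses a Lamport one-time signature built on SHA-256; real DID methods typically publish Ed25519 or ECDSA keys, which can sign many messages, whereas a Lamport key must never sign more than one.

```python
import hashlib
import secrets

# Lamport one-time signature over SHA-256, standing in for the Ed25519/ECDSA
# keys a real DID method would publish. Each key pair must sign ONE message only.

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def _bits(message: bytes) -> list[int]:
    # The 256 bits of SHA-256(message), most significant bit first.
    digest = _h(message)
    return [(digest[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]

def keygen() -> tuple[list, list]:
    # Private key: 256 pairs of random secrets. Public key: the hash of each secret.
    private = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public = [(_h(a), _h(b)) for a, b in private]
    return private, public

def sign(private: list, message: bytes) -> list[bytes]:
    # Reveal one secret from each pair, selected by the matching bit of the digest.
    return [private[i][bit] for i, bit in enumerate(_bits(message))]

def verify(public: list, message: bytes, signature: list[bytes]) -> bool:
    # Each revealed secret must hash to the public value selected by the same bit.
    if len(signature) != 256:
        return False
    return all(_h(s) == public[i][bit] for i, (s, bit) in enumerate(zip(signature, _bits(message))))

# Hypothetical in-memory DID registry, standing in for DID resolution on a DLT.
REGISTRY: dict[str, list] = {}

def register(did: str) -> list:
    # Publish the public key under the DID; the agent keeps the private key.
    private, public = keygen()
    REGISTRY[did] = public
    return private

def verify_request(did: str, message: bytes, signature: list[bytes]) -> bool:
    # Resolve the DID to its public key, then check the signature against it.
    public = REGISTRY.get(did)
    return public is not None and verify(public, message, signature)

agent_did = "did:example:bank-agent-01"
agent_key = register(agent_did)
request = b'{"action": "open_session", "customer": "c-123"}'
request_sig = sign(agent_key, request)

print(verify_request(agent_did, request, request_sig))  # True
print(verify_request(agent_did, b'{"action": "transfer"}', request_sig))  # False
```

The verifier never sees the private key; it only needs the public key that the DID resolves to, which is what lets any third party check the agent’s claims independently.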

Validating Training Data and Ethical Compliance

One of the core concerns with AI systems is hidden or biased training data.

Verifiable Credentials address this by providing cryptographically signed proof of an AI system’s foundations.

- Data Integrity: Credentials can verify the origin and integrity of datasets used to train AI models.

- Regulatory Proof: AI systems can present credentials issued by regulators or auditors to prove compliance with ethical guidelines or data standards.
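A common building block for dataset provenance is a content digest that an auditor records in a credential and any verifier can recompute. The sketch below assumes the dataset is a set of named files held in memory; the claim fields (`DatasetProvenanceClaim`, `datasetDigest`, `credit-model-v3`) and the file contents are hypothetical, and the issuer’s signature over the claim is left out.

```python
import hashlib
import json

def dataset_digest(files: dict[str, bytes]) -> str:
    # Hash each file, then hash the sorted (name, file-hash) manifest so the
    # result does not depend on the order in which files are listed.
    manifest = sorted((name, hashlib.sha256(data).hexdigest()) for name, data in files.items())
    canonical = json.dumps(manifest, separators=(",", ":")).encode()
    return hashlib.sha256(canonical).hexdigest()

# Hypothetical training files, held in memory for the example.
training_files = {
    "loans_2023.csv": b"id,income,approved\n1,52000,1\n",
    "loans_2024.csv": b"id,income,approved\n2,48000,0\n",
}

# The issuer (e.g. an auditor) records the digest in a claim, which it would then sign.
claim = {
    "type": "DatasetProvenanceClaim",  # hypothetical claim type
    "model": "credit-model-v3",        # hypothetical model identifier
    "datasetDigest": dataset_digest(training_files),
}

# Later, a verifier recomputes the digest from the data it is shown.
print(dataset_digest(training_files) == claim["datasetDigest"])  # True

# Changing a single label in one file produces a different digest.
tampered = {**training_files, "loans_2024.csv": b"id,income,approved\n2,48000,1\n"}
print(dataset_digest(tampered) == claim["datasetDigest"])  # False
```

Sorting the manifest makes the digest independent of file order, while changing any byte in any file changes the result.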

Creating Auditable “Receipts” of Decisions

Verifiable AI demands traceability—every decision should be reviewable after the fact.

- Tamper-Evident Records: Verifiable Credentials can act as decision “receipts,” forming audit trails that show which policies or rules influenced a specific outcome.

- Accountability: These records serve as cryptographic certificates of compliance that regulators and auditors can independently verify.
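One simple way to make decision receipts tamper-evident is a hash chain, where each receipt commits to the hash of the one before it. This is a minimal sketch assuming decisions are JSON-serializable dictionaries; the field names (`application`, `outcome`, `policy`) are hypothetical. On its own, the chain only reveals edits to earlier entries: to also detect a truncated or fully rebuilt log, the latest hash would need to be anchored somewhere the operator cannot rewrite, such as a DLT transaction or a signed credential.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first receipt

def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys, no extra whitespace) so equal bodies hash equally.
    payload = json.dumps(body, sort_keys=True, separators=(",", ":")).encode()
    return hashlib.sha256(payload).hexdigest()

def append_receipt(log: list[dict], decision: dict) -> dict:
    # Each receipt commits to its position and to the previous receipt's hash.
    prev = log[-1]["hash"] if log else GENESIS
    body = {"seq": len(log), "prev": prev, "decision": decision}
    receipt = {**body, "hash": _digest(body)}
    log.append(receipt)
    return receipt

def verify_log(log: list[dict]) -> bool:
    # Recompute every hash and check that each receipt links to its predecessor.
    prev = GENESIS
    for i, receipt in enumerate(log):
        body = {"seq": receipt["seq"], "prev": receipt["prev"], "decision": receipt["decision"]}
        if receipt["seq"] != i or receipt["prev"] != prev or receipt["hash"] != _digest(body):
            return False
        prev = receipt["hash"]
    return True

log: list[dict] = []
append_receipt(log, {"application": "A-1001", "outcome": "approved", "policy": "credit-policy-v7"})
append_receipt(log, {"application": "A-1002", "outcome": "declined", "policy": "credit-policy-v7"})
print(verify_log(log))  # True

log[0]["decision"]["outcome"] = "declined"  # edit an earlier decision after the fact
print(verify_log(log))  # False
```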

Enabling Privacy-Preserving Interactions

A key advantage of Verifiable Credentials is that they enable trust without sacrificing privacy.

- Selective Disclosure: AI systems can prove specific attributes (e.g., age eligibility) without revealing full identity details.

- Zero-Knowledge Proofs (ZKPs): AI agents can prove they followed defined rules—such as avoiding restricted data—without exposing sensitive information.

Verifiable AI in Action: Real-World Use Cases Bridging the Trust Gap

Healthcare: Verifying Diagnostics and Patient Safety

In healthcare, an AI error can be life-threatening.

- Credentialed Diagnostics: Medical AI systems can present Verifiable Credentials proving their training data meets regulatory standards.

- Audit Trails: Providers can review decision rationales to ensure alignment with clinical protocols.

- Example: A hospital AI assistant can prove its authorization before accessing patient records, supporting compliance with regulations such as GDPR.

Banking and Finance: Explaining AI-Driven Decisions

In finance, “the AI decided” is not a valid explanation.

- Fairness Audits: Verifiable AI allows loan decisions to be traced and audited for bias.

- Fraud Detection: Tamper-evident decision logs make each fraud flag traceable, so false positives can be reviewed and corrected more transparently.

- DeFi Use Case: Trading bots can use DIDs to prove authorized control over on-chain transactions.

Content Integrity: Combating Deepfakes and Misinformation

As synthetic media becomes harder to detect, provenance matters.

- Source Authentication: AI-generated content can carry cryptographic signatures proving origin.

- Journalism: News organizations can verify content integrity before publication.

- Creative Platforms: Signed digital identifiers help creators establish authorship and protect intellectual property.

Agentic AI: Secure Delegation and Negotiation

As AI agents act on behalf of users, trust becomes critical.

- Delegated Authority: Agents can prove limited spending authority without exposing full financial data.

- Privacy-Preserving Commerce: Selective disclosure enables age or eligibility verification without identity leakage.

- Human Binding: Liveness checks and biometric verification bind an agent to a verified person, helping ensure it acts with legitimate authorization.
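The delegated-authority check can be sketched as a handful of rules a merchant or verifier applies to a delegation credential: a trusted issuer, the right subject, a validity window, and a spending cap. This sketch assumes the credential’s signature has already been verified; the `Delegation` fields, the `TRUSTED_ISSUERS` set, and the DIDs are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical set of issuers (users' wallets) this verifier trusts.
TRUSTED_ISSUERS = {"did:example:user-wallet"}

@dataclass(frozen=True)
class Delegation:
    issuer: str            # who granted the authority (the user)
    subject: str           # the agent's DID
    max_amount: int        # spending cap in minor units (e.g. cents)
    currency: str
    valid_from: datetime
    valid_until: datetime

def authorize(d: Delegation, agent_did: str, amount: int, currency: str, now: datetime) -> bool:
    # Assumes the credential's signature was already checked by the caller.
    return (
        d.issuer in TRUSTED_ISSUERS                 # issued by a trusted party
        and d.subject == agent_did                  # presented by the agent it names
        and d.valid_from <= now <= d.valid_until    # valid at the time of inspection
        and currency == d.currency
        and 0 < amount <= d.max_amount              # within the delegated limit
    )

grant = Delegation(
    issuer="did:example:user-wallet",
    subject="did:example:shopping-agent",
    max_amount=20_000,  # 200.00 EUR
    currency="EUR",
    valid_from=datetime(2025, 1, 1, tzinfo=timezone.utc),
    valid_until=datetime(2025, 12, 31, tzinfo=timezone.utc),
)
at = datetime(2025, 6, 1, tzinfo=timezone.utc)
print(authorize(grant, "did:example:shopping-agent", 15_000, "EUR", at))  # True
print(authorize(grant, "did:example:shopping-agent", 25_000, "EUR", at))  # False: over the cap
```

The verifier learns only the cap and the validity window, not the user’s balance or account details, which is the property the delegated-authority bullet describes.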

Conclusion: Verifiable AI Is Like a Passport System for Trust

Think of Verifiable AI the same way you think about international travel. A passport does not tell your whole story, but it proves a few critical facts: who issued it, that it hasn’t been tampered with, and that it is valid at the time of inspection. Border control doesn’t rely on claims; it verifies proof from a trusted authority.

Verifiable AI works the same way. Instead of blindly trusting an AI system’s output, it ensures that every action and decision can be proven: who deployed the AI, what it is authorized to do, and whether it followed the required rules. This is where Verifiable Credentials play a critical role. They act as the visas attached to the AI’s passport, defining scope, permission, and compliance, and making trust verifiable, enforceable, and reusable as AI systems operate across platforms and ecosystems.

Where DgVerse Fits In

Verifiable AI is not just a concept—it requires real infrastructure to issue, manage, and verify trust at scale.

DgVerse provides a unified B2B SaaS platform that enables organizations to:

If your organization is deploying AI systems where trust, compliance, and accountability matter, DgVerse helps you move from black-box decisions to verifiable, revenue-generating digital trust.

Talk to us about building Verifiable AI